Migrate a MySQL/MariaDB database to Open Telekom Cloud RDS with Apache NiFi

Apache NiFi (nye-fye) is a project from the Apache Software Foundation that aims to automate the flow of data between systems in the form of workflows. It supports powerful and scalable directed graphs of data routing, transformation, and system mediation logic. We are going to use these features to consolidate all the manual, mundane and error-prone steps of migrating an on-premises MySQL/MariaDB database to Open Telekom Cloud RDS into a highly scalable workflow.

Note

Historically, NiFi is based on "NiagaraFiles", a system developed by the US National Security Agency (NSA). It was open-sourced as part of the NSA's technology transfer program in 2014.

Overview

With zero cost for third-party components and in less than 15 minutes, we are going to turn a highly error-prone and demanding use case, the migration of a MySQL or MariaDB database to the cloud, into a fully automated, repeatable and scalable procedure.

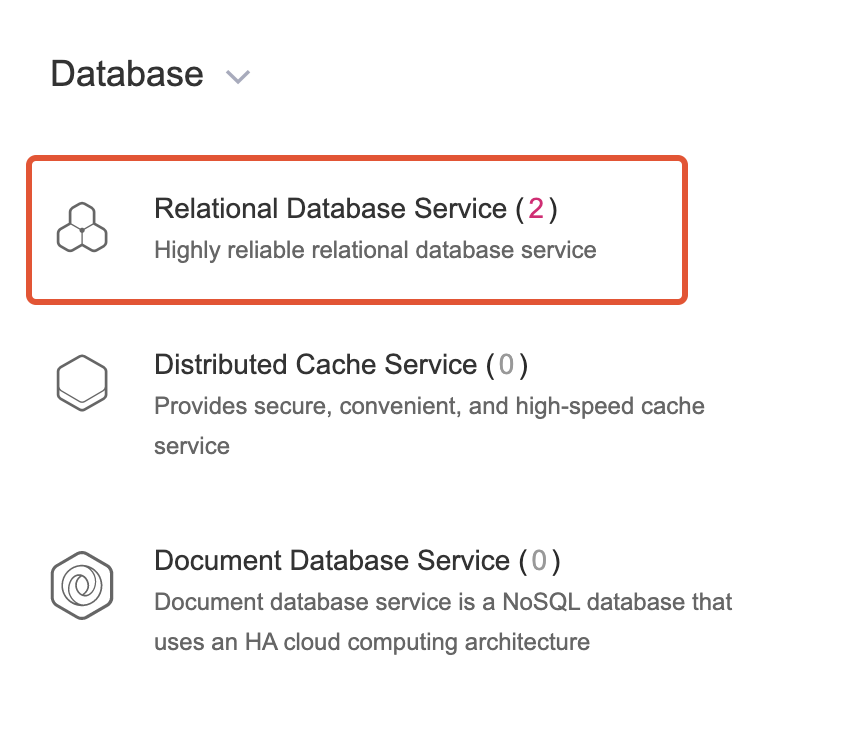

Provision a MySQL instance in RDS

If you don't have an RDS instance in place, let's create one in order to demonstrate this use case. Under Relational Database Service in the Open Telekom Cloud Console,

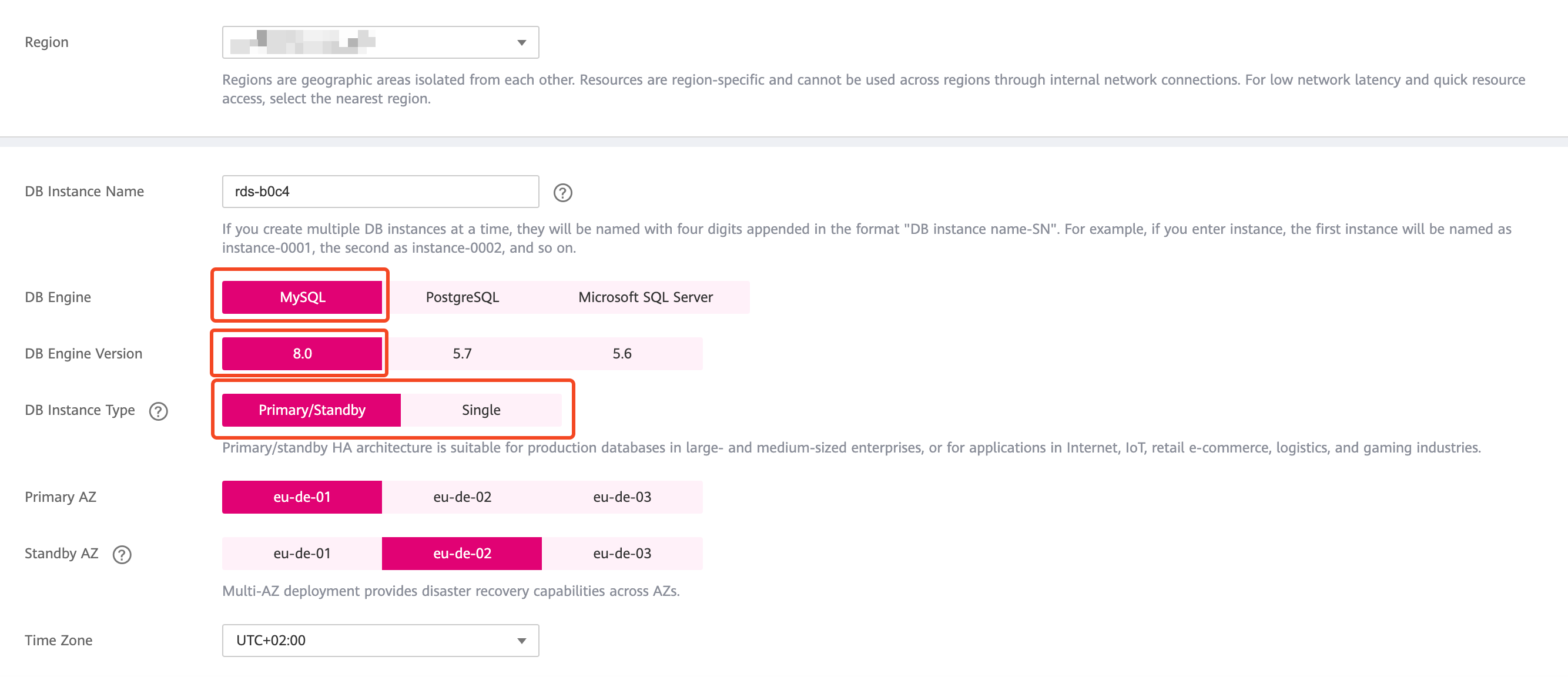

choose Create DB Instance and go through the creation wizard:

1. Choose the basic details of your database engine. For this use case, stick to MySQL engine v8.0. Whether you create a single-instance database or a replicated one with primary and standby instances is irrelevant to our use case.

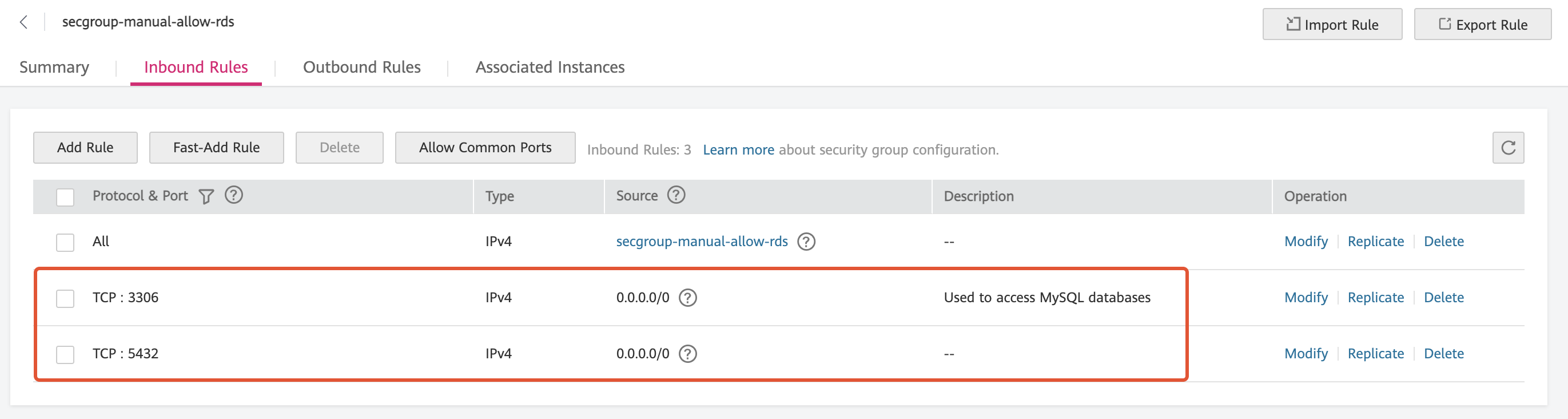

2. Create a new Security Group that allows inbound traffic on port 3306, and assign it as the Security Group of the ECS instances of your database (still in the database creation wizard).

3. After the database is successfully created, enable SSL support:

Warning

Transferring production data without SSL enabled is not recommended.

Provision an Apache NiFi Server

We are going to deploy the Apache NiFi server as a Docker container using the following command (first replace the credential placeholders with values of your choice):

docker run --name nifi \

-p 8443:8443 \

-d \

-e SINGLE_USER_CREDENTIALS_USERNAME={{USERNAME}} \

-e SINGLE_USER_CREDENTIALS_PASSWORD={{PASSWORD}} \

apache/nifi:latestand then open your browser and navigate to the following URL address:

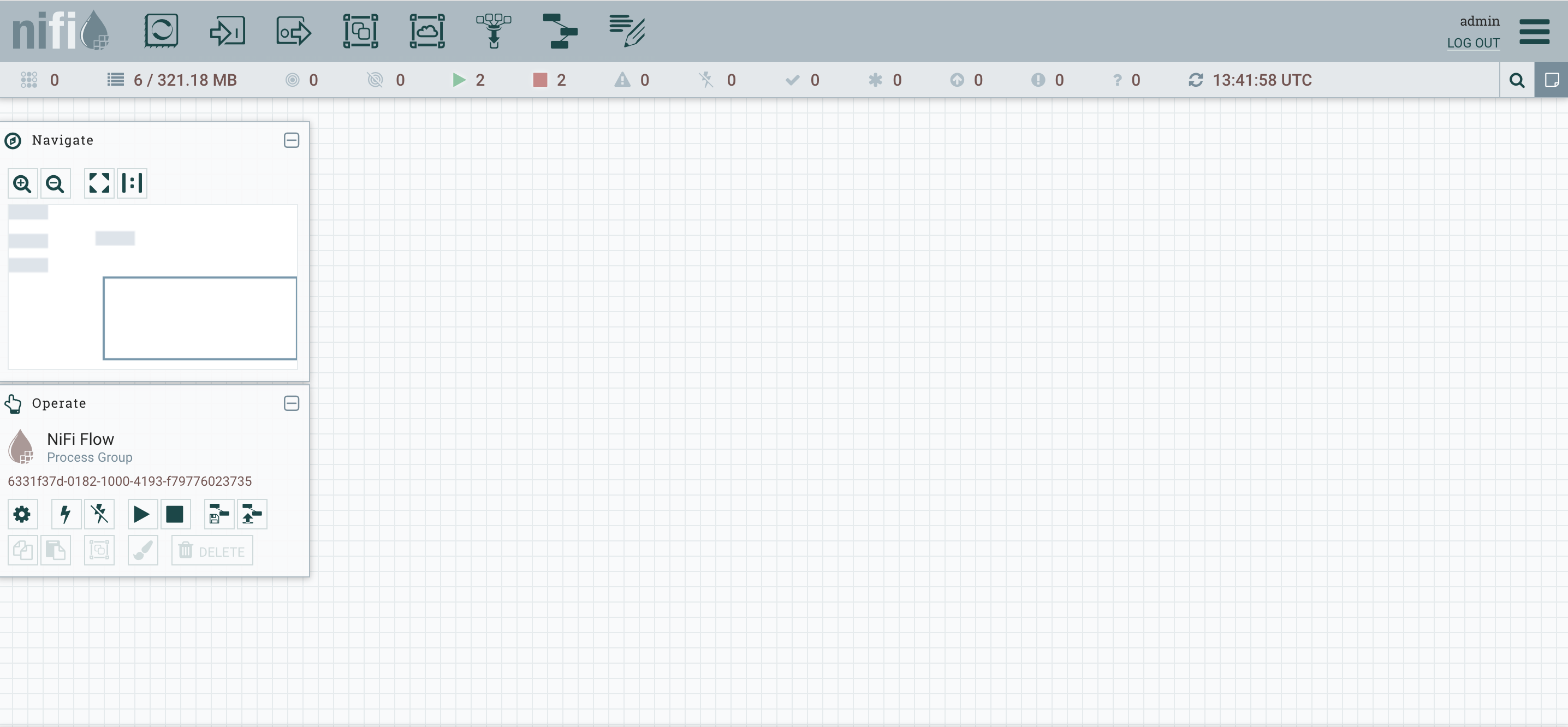

https://localhost:8443/nifi/enter your credentials and you will land on an empty workflow canvas:

Create the migration workflow

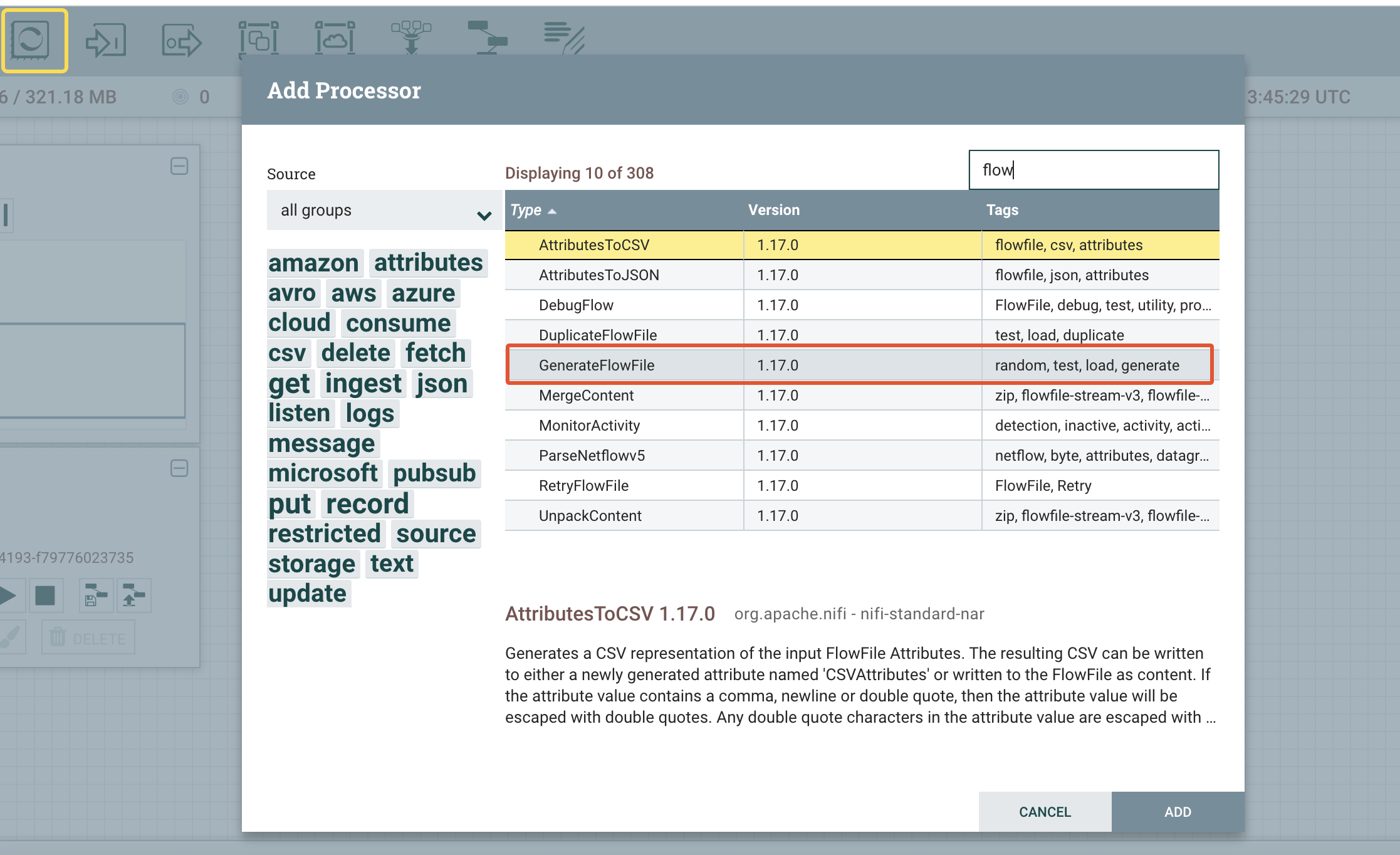

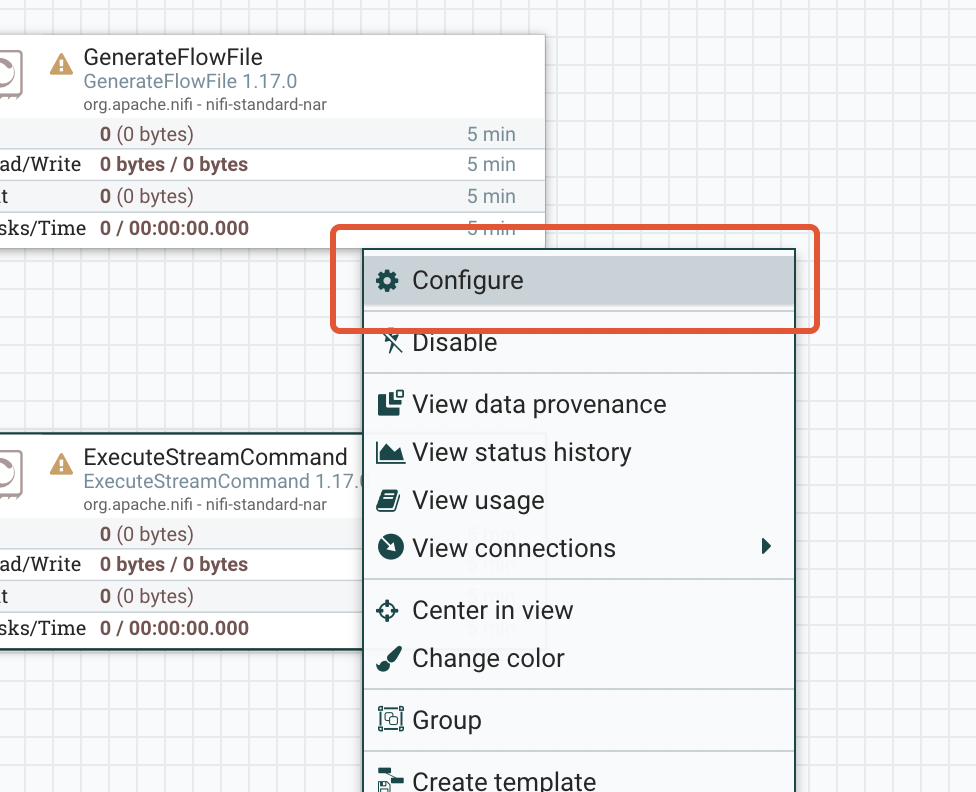

1. Add a Processor of type GenerateFlowFile as the entry point of our workflow (as shown in the following picture):

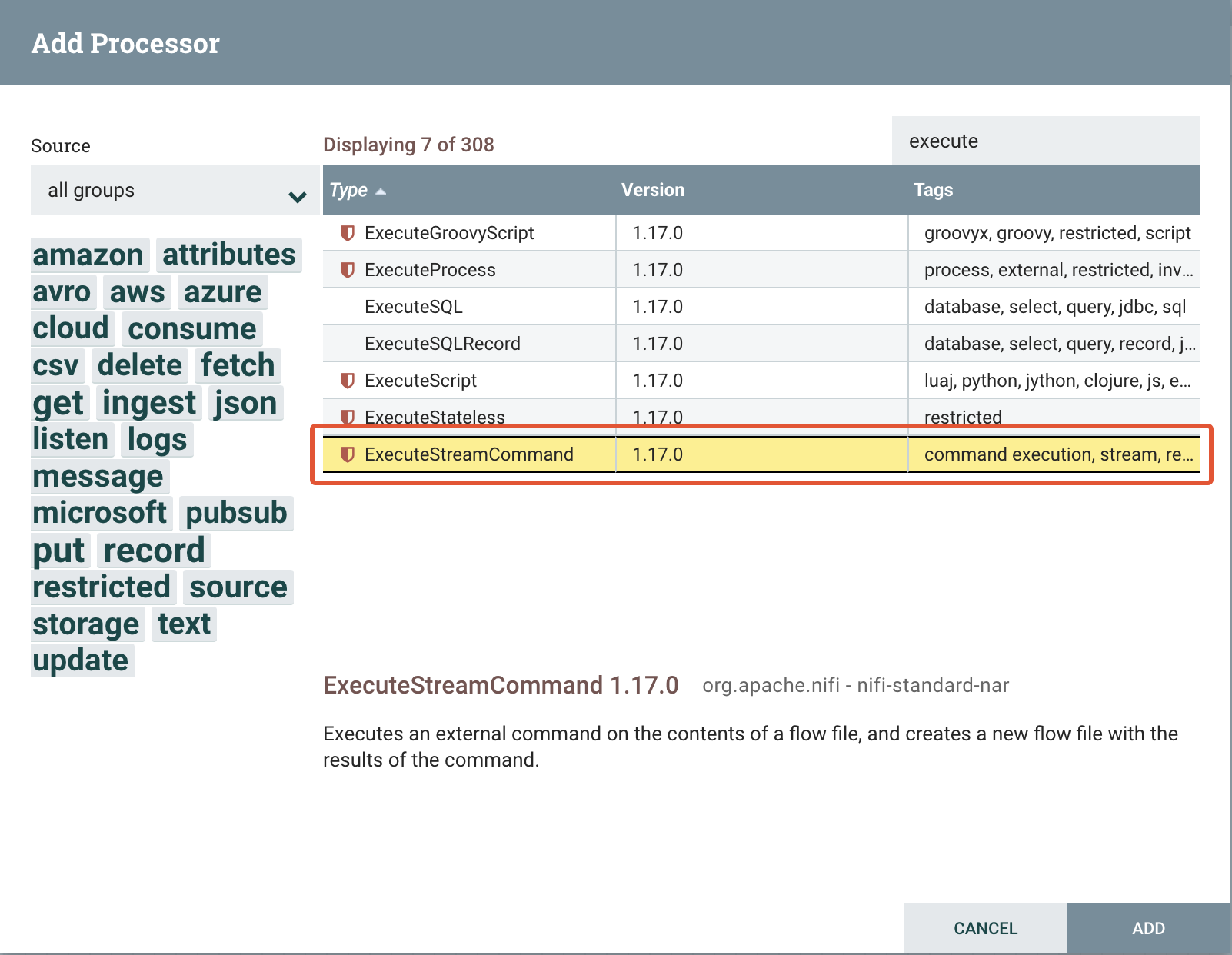

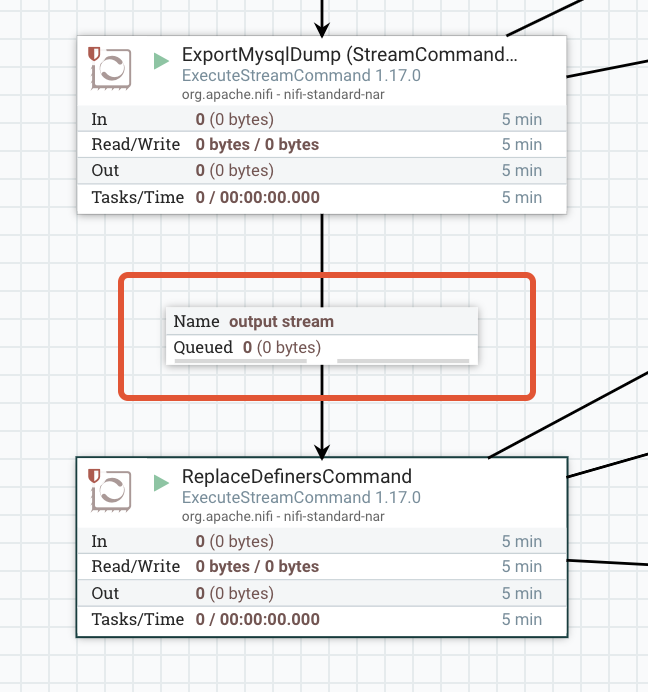

2. Add a Processor of type ExecuteStreamCommand as the step that will dump and export our source database, and call it ExportMysqlDump:

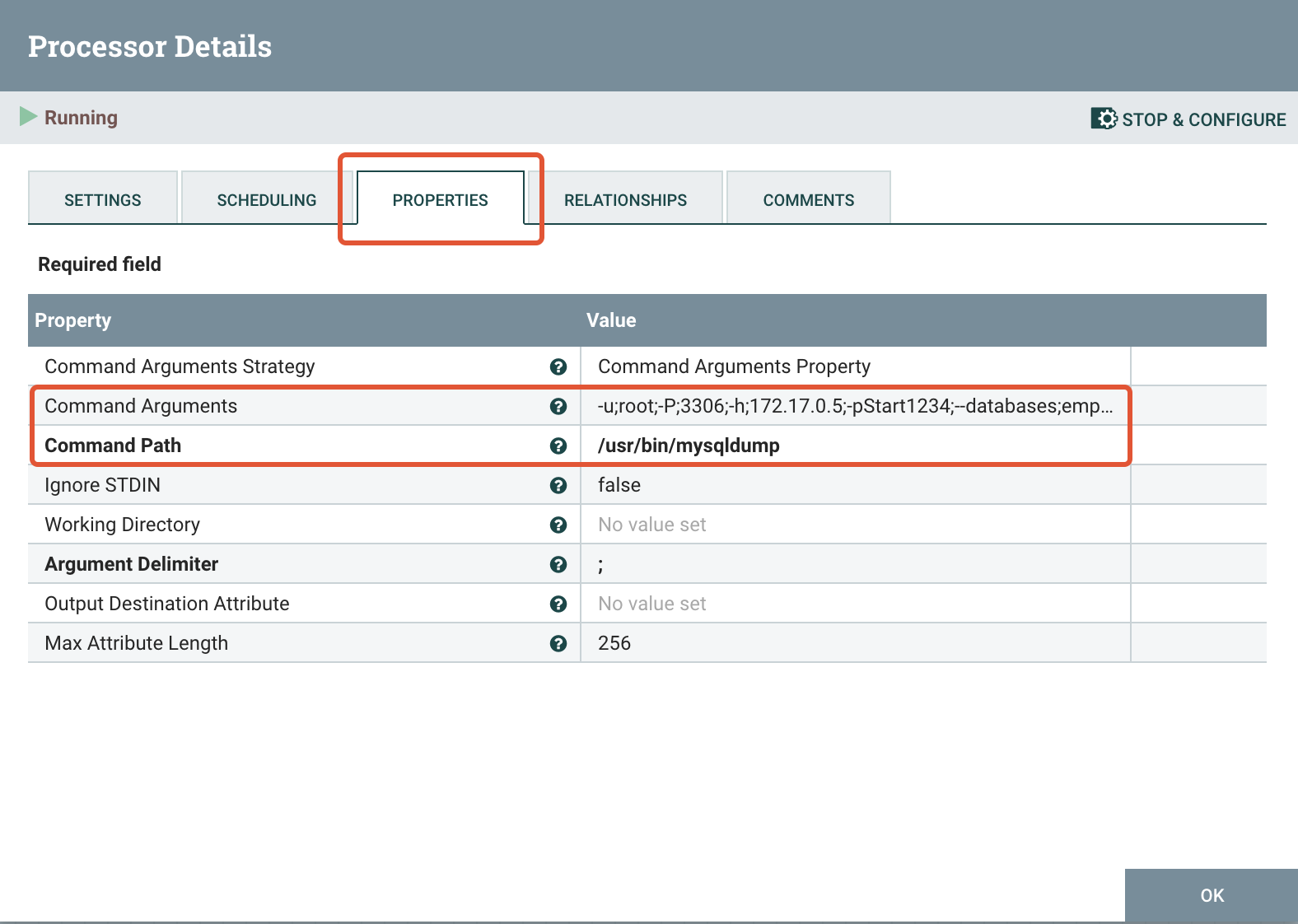

Now let's configure the external command we want this component to execute. Go to Properties in the tab menu.

As Command Path set :

/usr/bin/mysqldumpand as Command Arguments fill in the mysql-client arguments, but separated by a semicolon (replace the highlighted values with your own):

-u;root;-P;3306;-h;{{HOSTNAME_OR_CONTAINER_IP}};-p{{PASSWORD}};

--databases;employees;--routines;--triggers;--single-transaction;

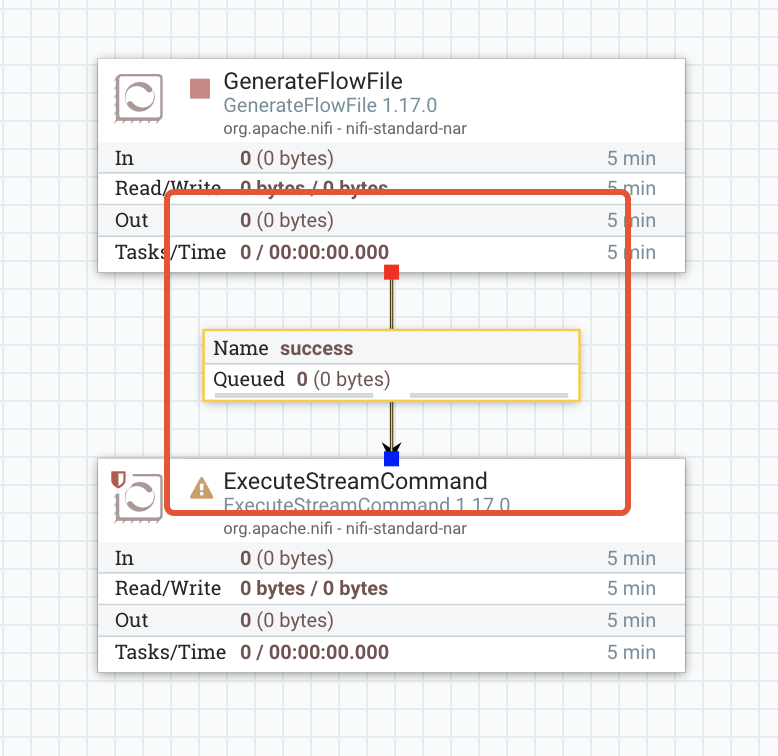

--order-by-primary;--gtid;--forceConnect the two Processors by dragging a connector line from the first to the latter. You should be able to observe now that a Queue component is injected between them:

We will see later how these Queues contribute to the workflow and how we can use them to gain useful insights or debug our workflows.
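As a rough aid for reading the Command Arguments of ExportMysqlDump: NiFi splits the value at each semicolon and passes the pieces to the executable as separate arguments, so the configuration corresponds approximately to an ordinary command line. The helper below is a hypothetical sketch (the function name `nifi_args_to_cmdline`, the host name and the password are illustrative assumptions, not part of NiFi) that prints such a command line, so that it can be tried in a terminal before the arguments are pasted into the Processor:

```shell
# Hypothetical helper (not part of NiFi): turn a Command Path plus
# semicolon-separated Command Arguments into a readable command line.
nifi_args_to_cmdline() {
  command_path=$1
  command_args=$2
  # Replace every semicolon delimiter with a space.
  printf '%s %s\n' "$command_path" "$(printf '%s' "$command_args" | tr ';' ' ')"
}

# Placeholder host and password; substitute your own values.
nifi_args_to_cmdline /usr/bin/mysqldump \
  '-u;root;-P;3306;-h;db.example.internal;-pCHANGE_ME;--databases;employees;--routines;--triggers;--single-transaction;--order-by-primary;--gtid;--force'
```

The sketch only covers the simple case used here; argument values that themselves contain spaces or semicolons would need quoting and are not handled.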

3. Open Telekom Cloud RDS for MySql will not permit SUPER privileges or the SET_USER_ID privilege to any user, and this will lead to the following error when you will try to run the migration workflow for the first time:

ERROR 1227 (42000) at line 295: Access denied;

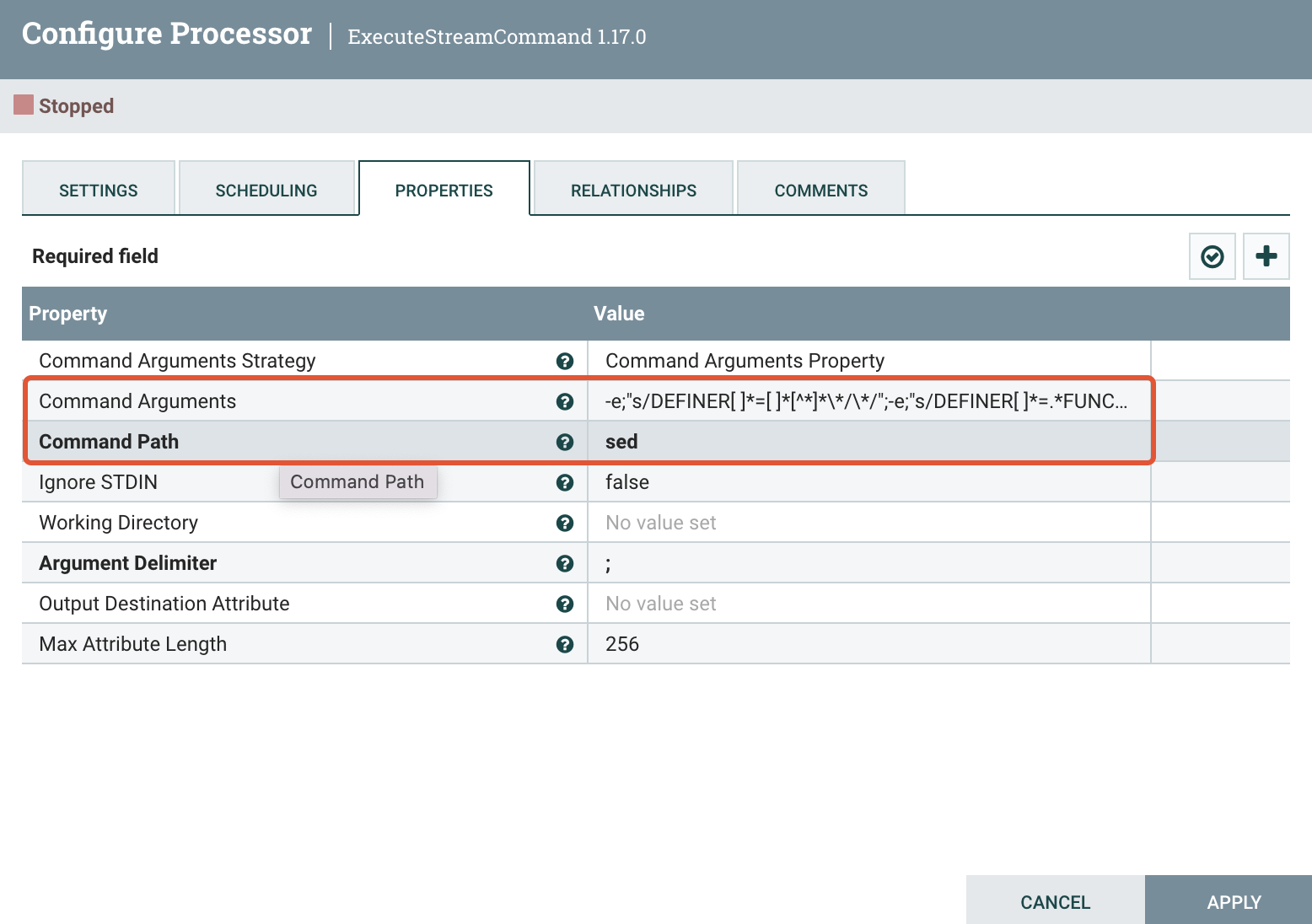

you need (at least one of) the SUPER or SET_USER_ID privilege(s) for this operationThe error above may occur while executing CREATE VIEW, FUNCTION, PROCEDURE, TRIGGER OR EVENT with DEFINER statements as part of importing a dump file or running a script. In order to preactively mitigate this situation, we are going to add a second Processor of type ExecuteStreamCommand. This Processor (let’s call it ReplaceDefinersCommand) will edit the dump file script and replace the DEFINER values with the appropriate user with admin permissions who is going to perform the import or execute the script file.

As Command Path set:

sed

and as Command Arguments (in one line):

-e;"s/DEFINER[ ]*=[ ]*[^*]*\*/\*/";
-e;"s/DEFINER[ ]*=.*FUNCTION/FUNCTION/";
-e;"s/DEFINER[ ]*=.*PROCEDURE/PROCEDURE/";
-e;"s/DEFINER[ ]*=.*TRIGGER/TRIGGER/";
-e;"s/DEFINER[ ]*=.*EVENT/EVENT/"

Connect the two ExecuteStreamCommand Processors by dragging a connector line from the first to the second. You should now see that a second Queue component has been added between them on the canvas.

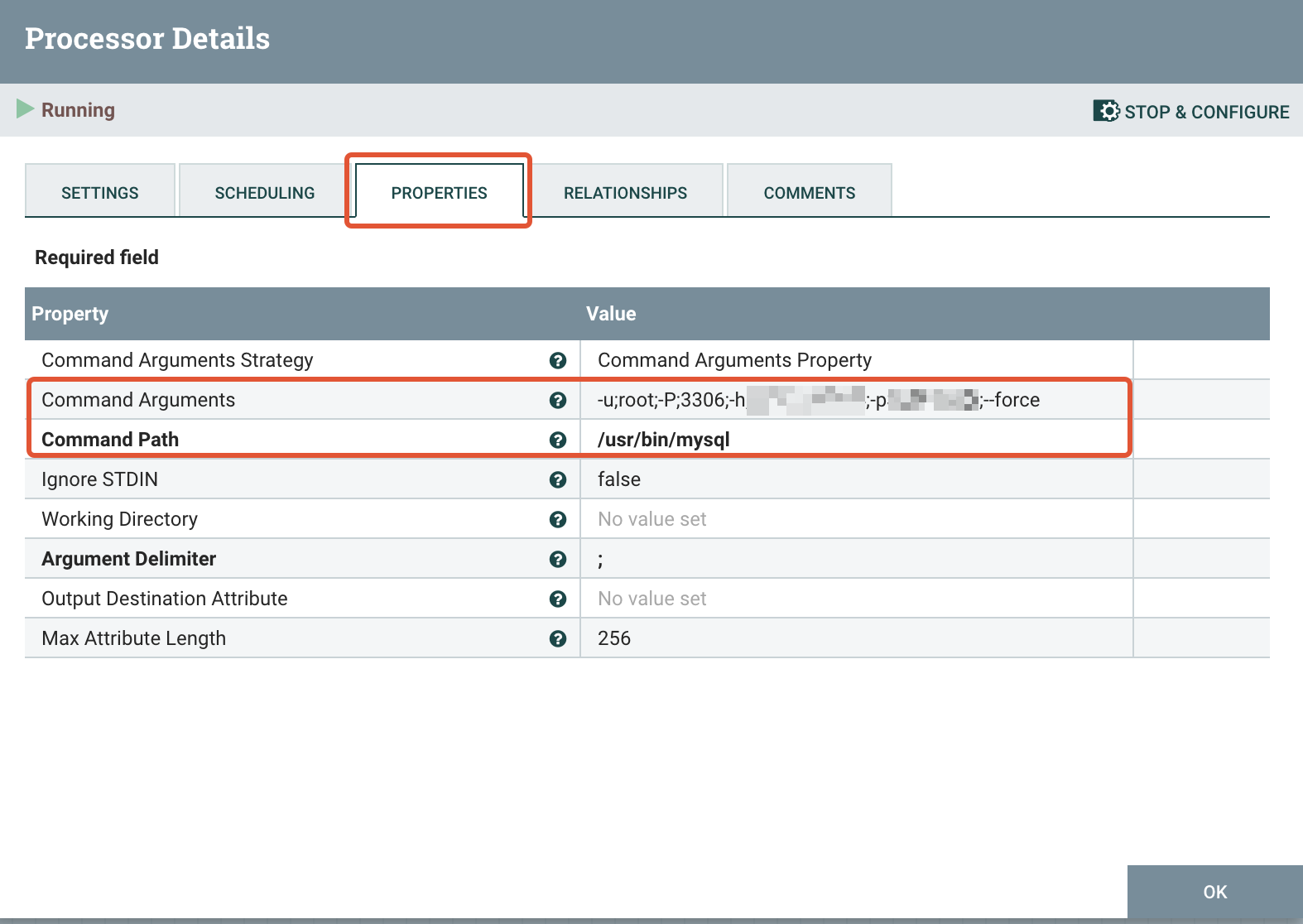

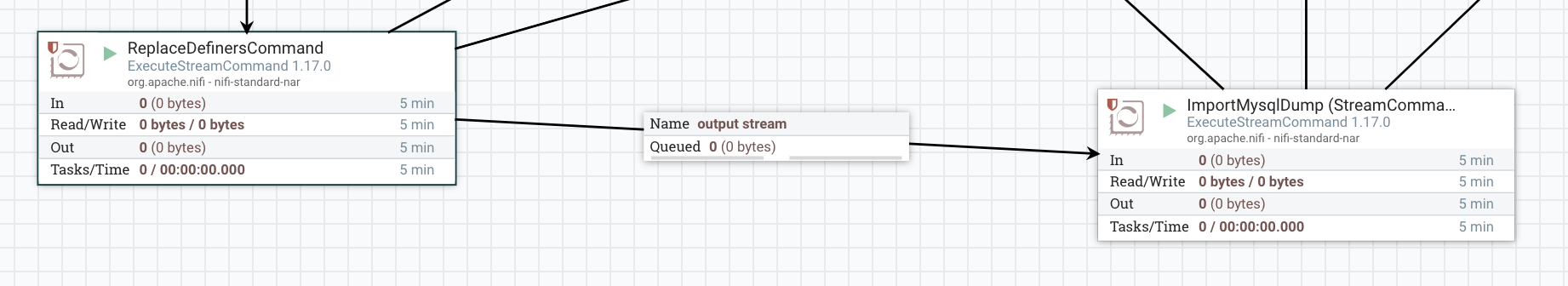
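To get a feeling for what the ReplaceDefinersCommand expressions do, they can be tried locally on a couple of lines before running the whole workflow. The sketch below wraps the same five expressions in a shell function; the function name `strip_definers` and the two sample lines are made up for illustration and only imitate the style of a mysqldump file:

```shell
# Same five expressions as in ReplaceDefinersCommand, as a plain sed call.
# Reads a dump from stdin and writes the edited dump to stdout.
strip_definers() {
  sed \
    -e 's/DEFINER[ ]*=[ ]*[^*]*\*/\*/' \
    -e 's/DEFINER[ ]*=.*FUNCTION/FUNCTION/' \
    -e 's/DEFINER[ ]*=.*PROCEDURE/PROCEDURE/' \
    -e 's/DEFINER[ ]*=.*TRIGGER/TRIGGER/' \
    -e 's/DEFINER[ ]*=.*EVENT/EVENT/'
}

# Illustrative sample lines (not taken from a real dump).
printf '%s\n' \
  '/*!50013 DEFINER=`root`@`localhost` SQL SECURITY DEFINER */' \
  'CREATE DEFINER=`root`@`localhost` FUNCTION `emp_count`() RETURNS int' |
  strip_definers
# Prints:
#   /*!50013 */
#   CREATE FUNCTION `emp_count`() RETURNS int
```

Note that the patterns are greedy: on a line where a keyword such as FUNCTION occurs more than once after DEFINER, everything up to the last occurrence is removed, so it is worth inspecting the edited dump in the queue before importing it.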

4. Add a third Processor of type ExecuteStreamCommand (same drill as with ExportMysqlDump). This step will import the dump into our target database; call it ImportMysqlDump. Let's configure it:

As Command Path set :

/usr/bin/mysqland as Command Arguments (in one line):

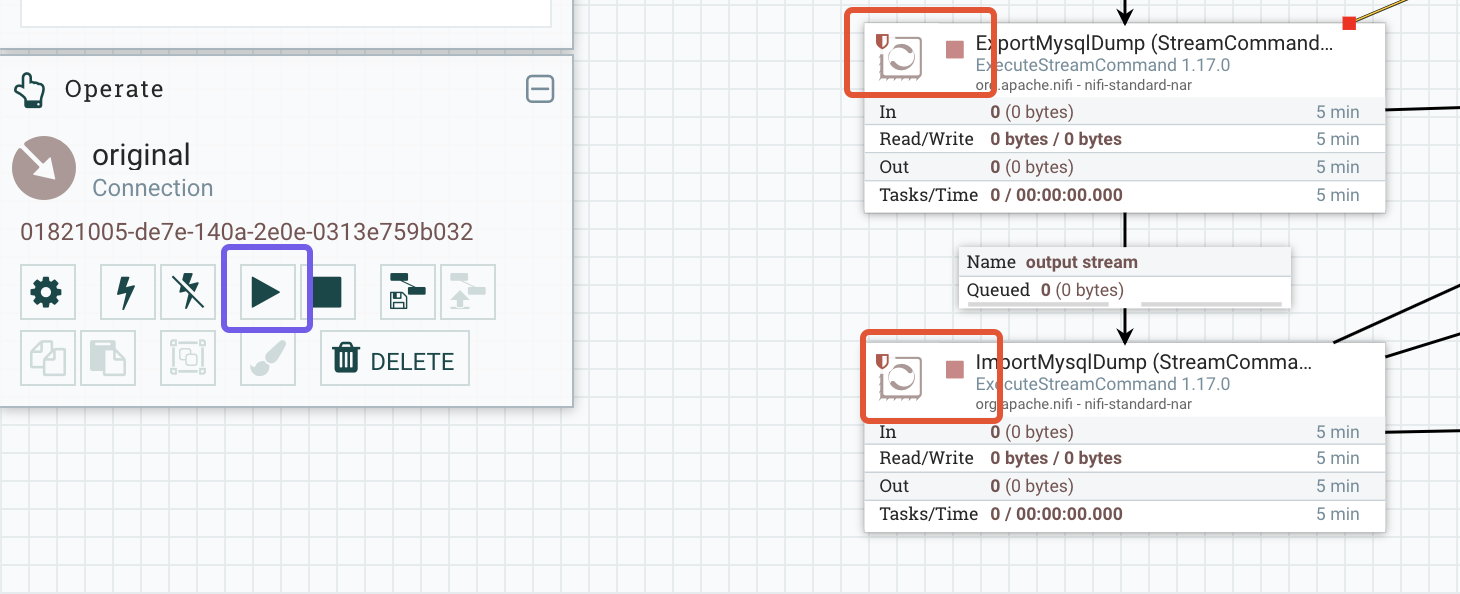

-u;root;-P;3306;-h;{{EIP}};-p{{PASSWORD}};--ssl-ca;/usr/bin/ca-bundle.pem;--forceConnect the ReplaceDefinersCommand with this new Processor, by dragging a connector line from the first to the second. You should be able to observe now that a second Queue component is added between them on the canvas:

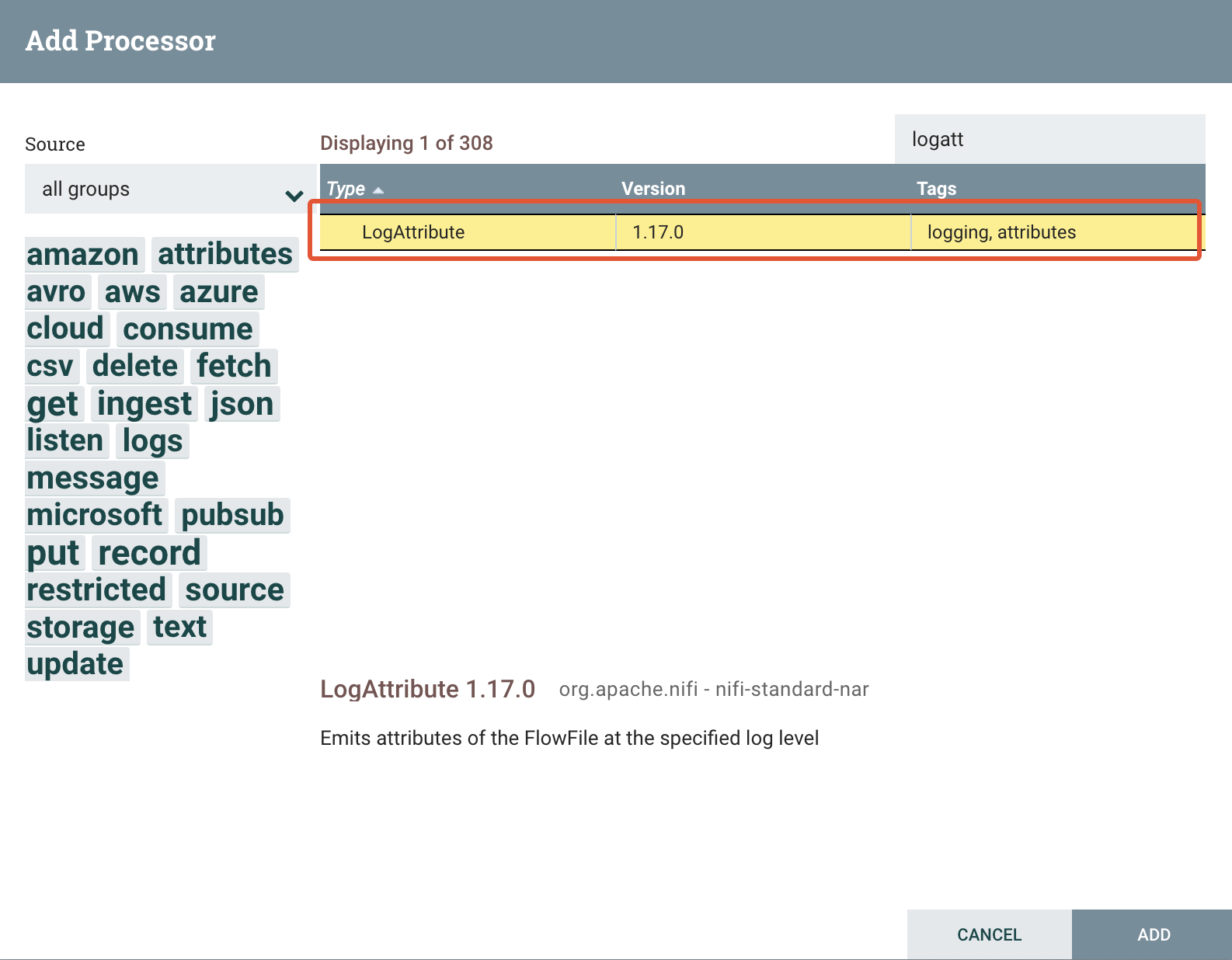

5. Add a Processor of type LogAttribute; this component emits the attributes of the FlowFile at a predefined log level.

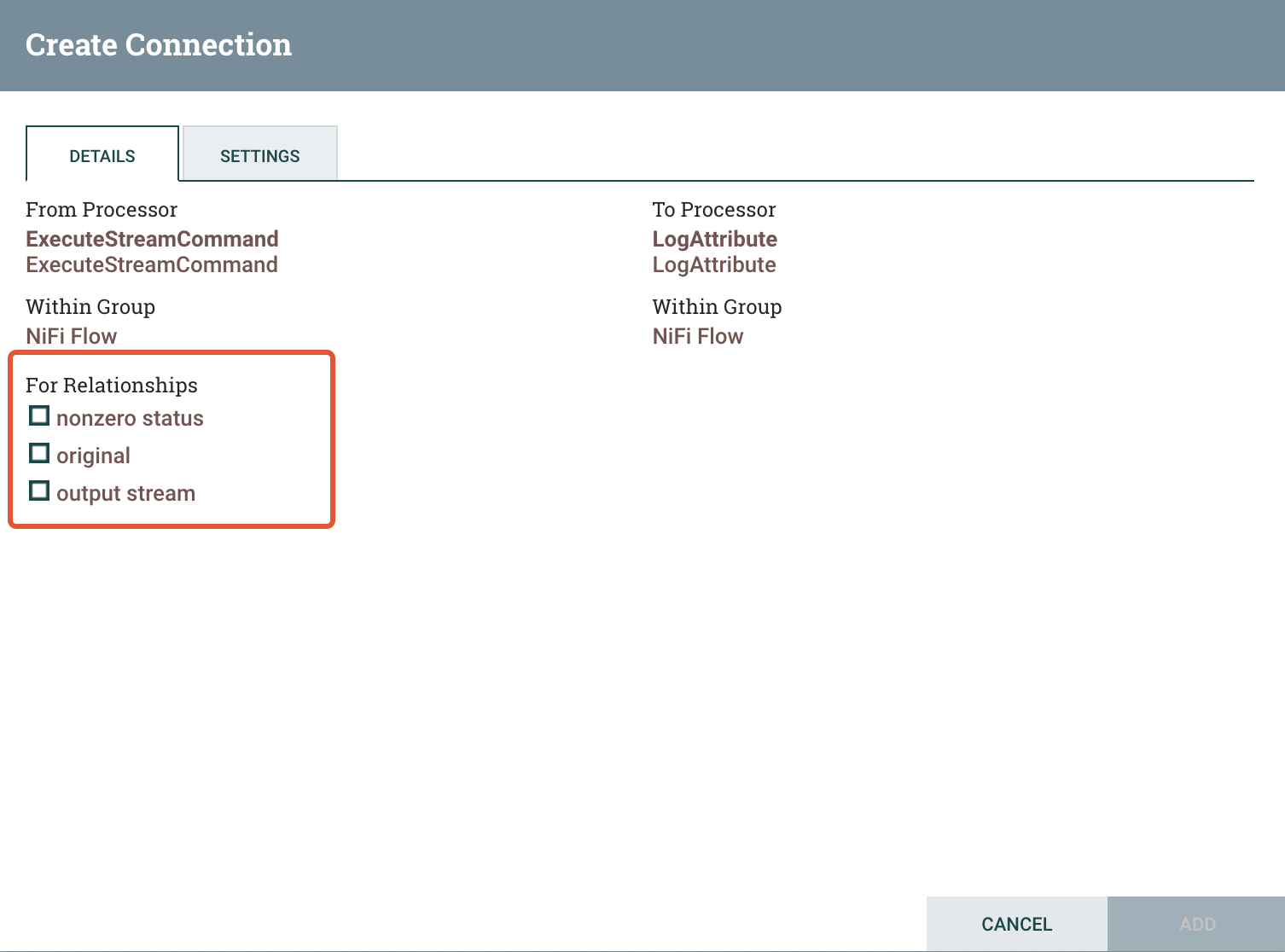

Then drag a connection between the ExportMysqlDump and the LogAttribute Processors, and in the Create Connection popup select two relationships: original and nonzero status. The former carries the original FlowFile that was processed by the Processor, and the latter carries the potential errors (nonzero results) raised during this step of the workflow. Every relationship injects a dedicated queue into the workflow. Repeat the same steps for the ReplaceDefinersCommand Processor. For the connection between the ImportMysqlDump and LogAttribute Processors, activate all three available relationships, so that the output stream logs the successful results of the import step.

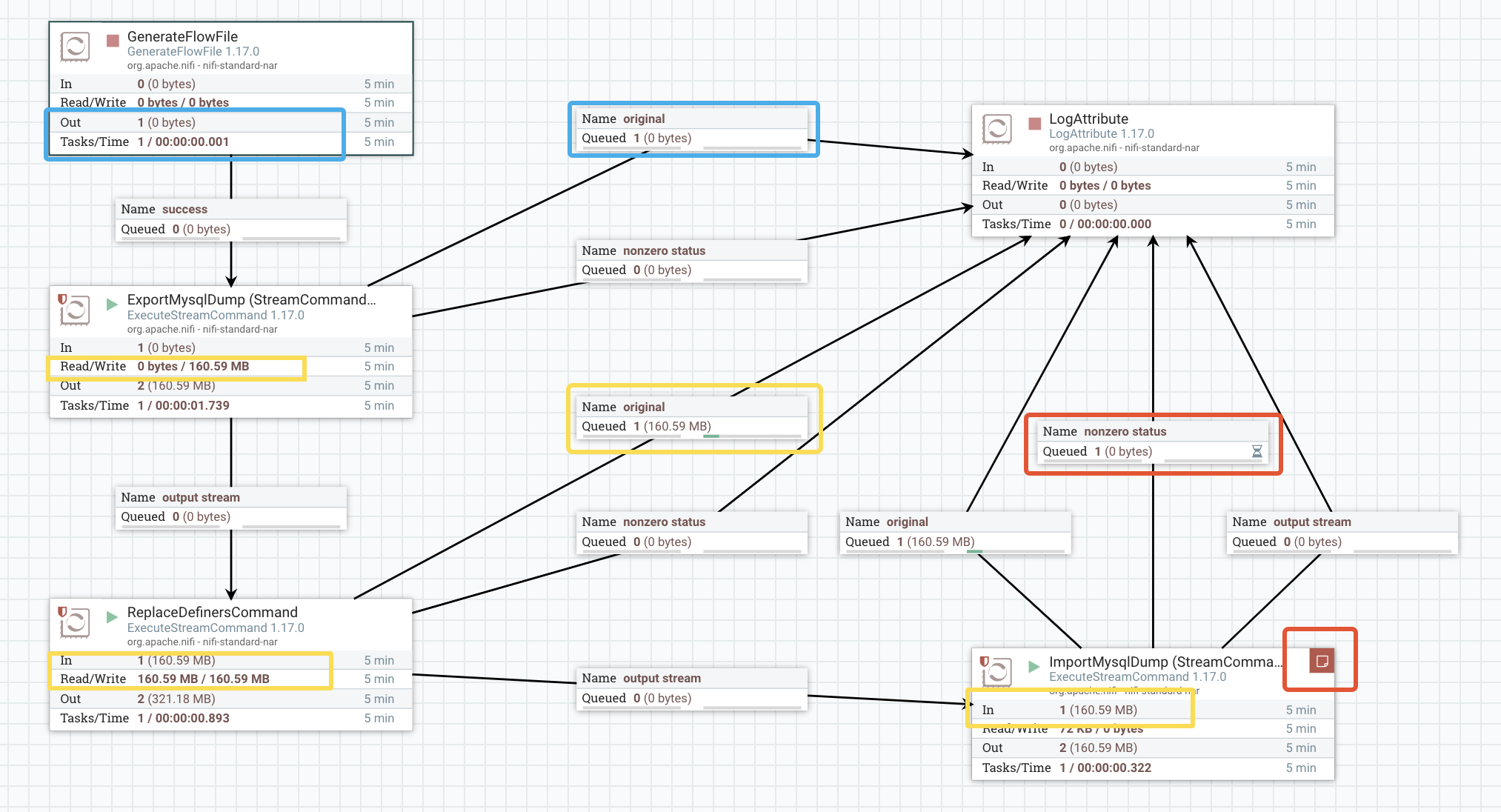

Eventually, our LogAttribute Processor and its dependencies should now look like this on the canvas:

6. Start the Processors. As you will notice, a stop sign appears in the upper left-hand corner of every Processor on the canvas. That means the Processors will not execute any commands even if we kick off a new instance of the workflow. To start them, press the start button (marked in blue in the picture below) for every one of them except LogAttribute:

Configure the Apache NiFi Server

At this point we are not yet ready to run our workflow, because the Apache NiFi server is lacking two additional resources. The ExportMysqlDump and ImportMysqlDump Processors export from and import to remote MySQL instances using the mysql-client, but the Apache NiFi container doesn't have this package. We have to connect to our container and install the required client.

Let's first connect to the Apache NiFi container as root:

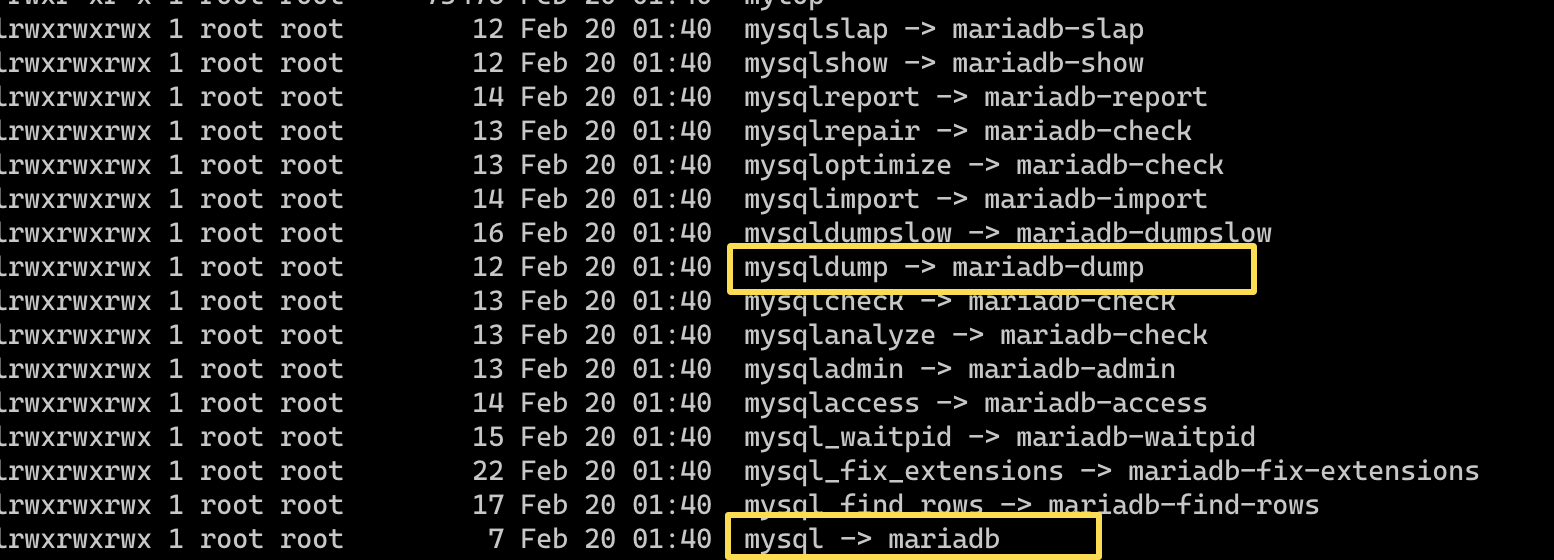

docker exec -it -u 0 nifi /bin/bash

and install the client (in this case, the mariadb-client package):

apt-get update -y
apt-get install -y mariadb-client

Now a quick sanity check to make sure that everything is in place: go to /usr/bin/ and make sure that mysqldump and mysql are properly symlinked:
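The sanity check can also be scripted. The following is a minimal sketch, assuming the clients end up under /usr/bin as the Command Path settings of the Processors expect; the function name `check_executables` is hypothetical:

```shell
# Hypothetical helper: report whether each given path is an executable file
# and, if it is a symlink, which target it points to.
check_executables() {
  missing=0
  for path in "$@"; do
    if [ -x "$path" ]; then
      if [ -L "$path" ]; then
        echo "ok: $path -> $(readlink "$path")"
      else
        echo "ok: $path"
      fi
    else
      echo "missing: $path" >&2
      missing=$((missing + 1))
    fi
  done
  # Succeed only if nothing was missing.
  [ "$missing" -eq 0 ]
}

# Run inside the NiFi container after installing mariadb-client.
check_executables /usr/bin/mysql /usr/bin/mysqldump ||
  echo "client tools not found; re-run the apt-get install step"
```

If either path is reported as missing, the ExportMysqlDump and ImportMysqlDump Processors will not be able to start their commands.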

Next, we have to copy the SSL certificate we downloaded from the Open Telekom Cloud console to the Apache NiFi container:

docker cp ca-bundle.pem nifi:/usr/bin

Attention

For the time being, let's skip the step above in order to simulate an error in the migration workflow; we will come back to it later.

Start a Migration Workflow

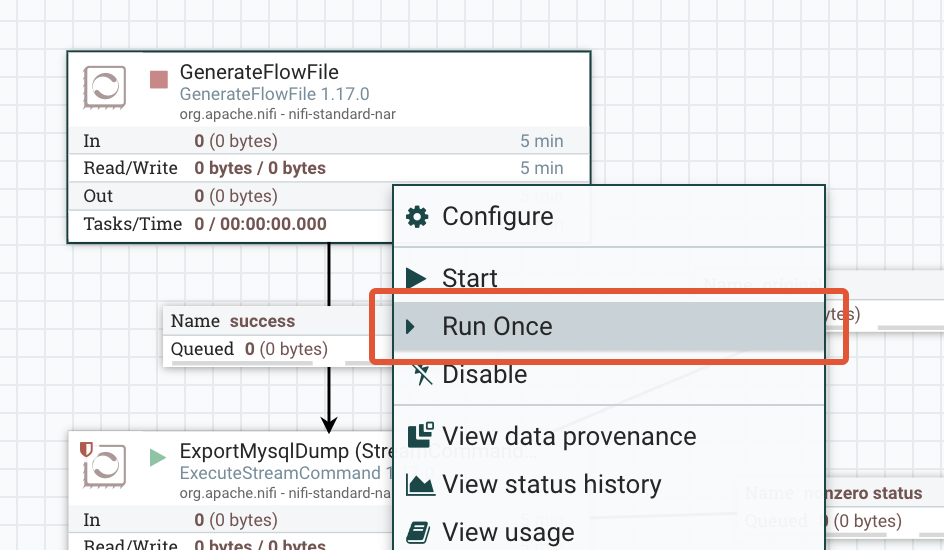

Open the cascading menu of the GenerateFlowFile component and click Run Once:

The currently active Processor will be marked with this sign in its upper right-hand corner on the canvas:

Let's see what happened and whether the migration went through and, if not, how we could debug and trace the source of the problem. The canvas is now updated with more data in every Processor and Queue:

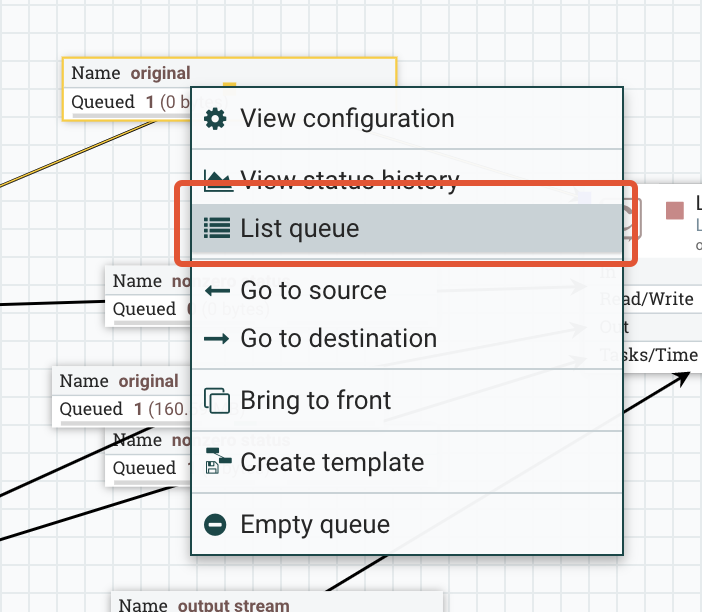

The GenerateFlowFile Processor informs us that it has sent 1 request down the pipeline (Out 1, in the box marked in blue). The ExportMysqlDump Processor ran successfully and wrote out a dump of 160.59 MB. Its logging queues show a new entry in original and zero entries in nonzero status (the latter indicates that the Processor ran without any error). Let's see what was written to the original queue. Open the queue:

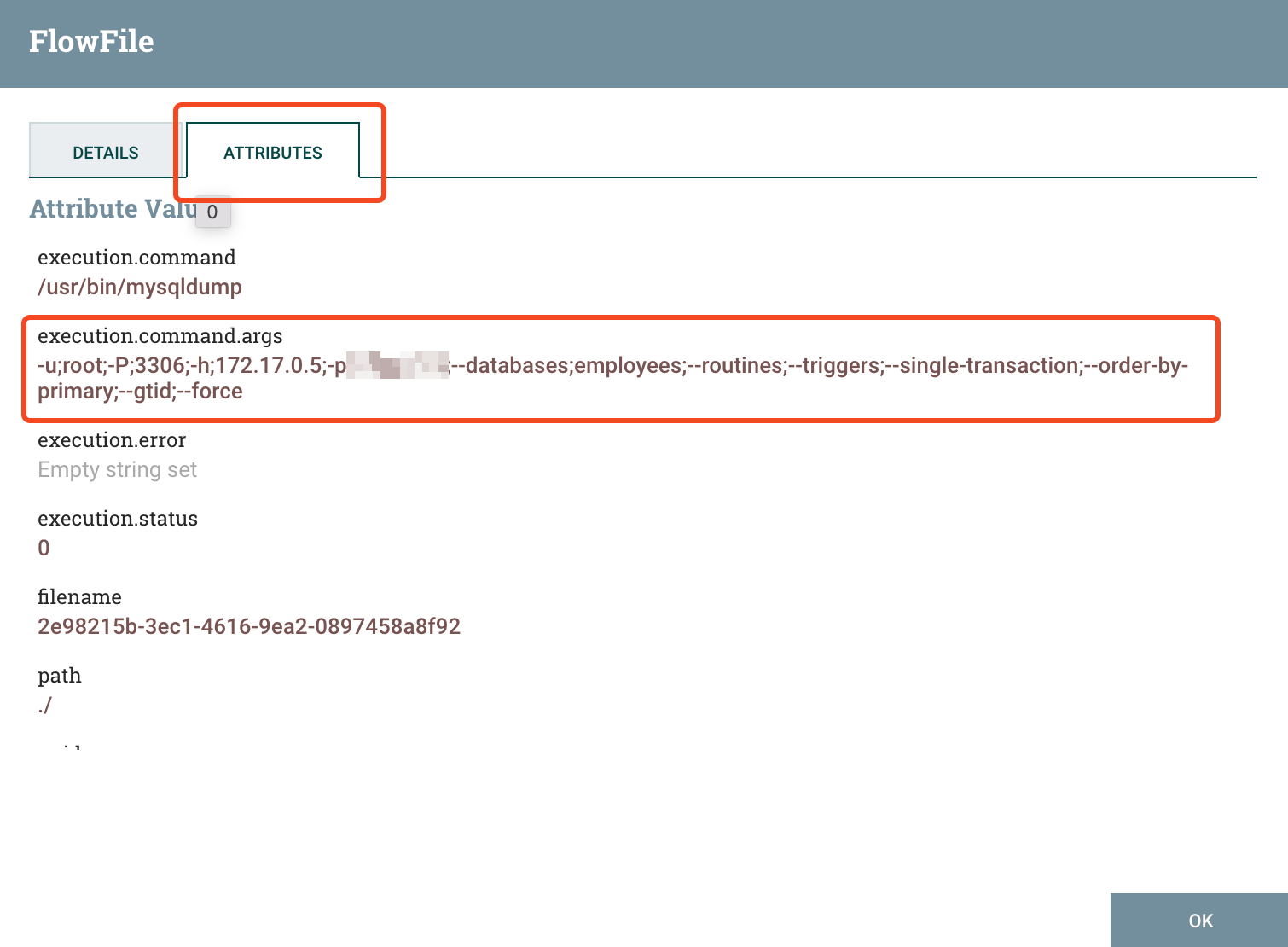

and under the Properties tab of the Queue, we can see which command was executed by our Processor:

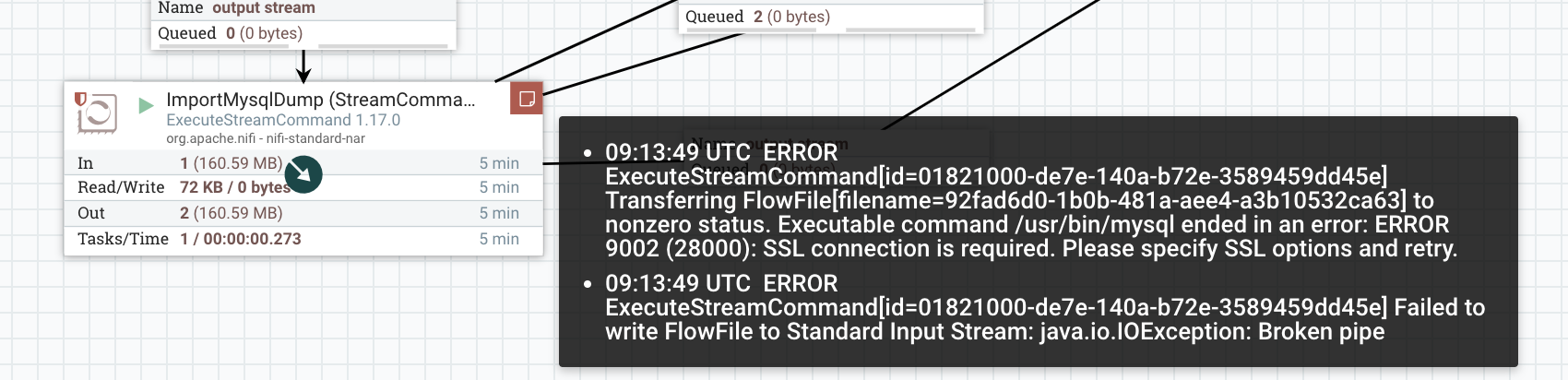

Now let's focus on the ImportMysqlDump Processor, the one responsible for importing the dump into the target database. We can see that it received an input of 160.59 MB (our dump file, generated by the previous Processor) and pushed it down to the original queue, but it seems the migration didn't go through as planned, because we have items in the nonzero status queue. As a first step towards finding the culprit, we will inspect the original queue (open List Queue and, under the Details tab, pick the element that corresponds to this very workflow instance). We can either inspect the generated dump file that was handed over by the ExportMysqlDump Processor by viewing or downloading it,

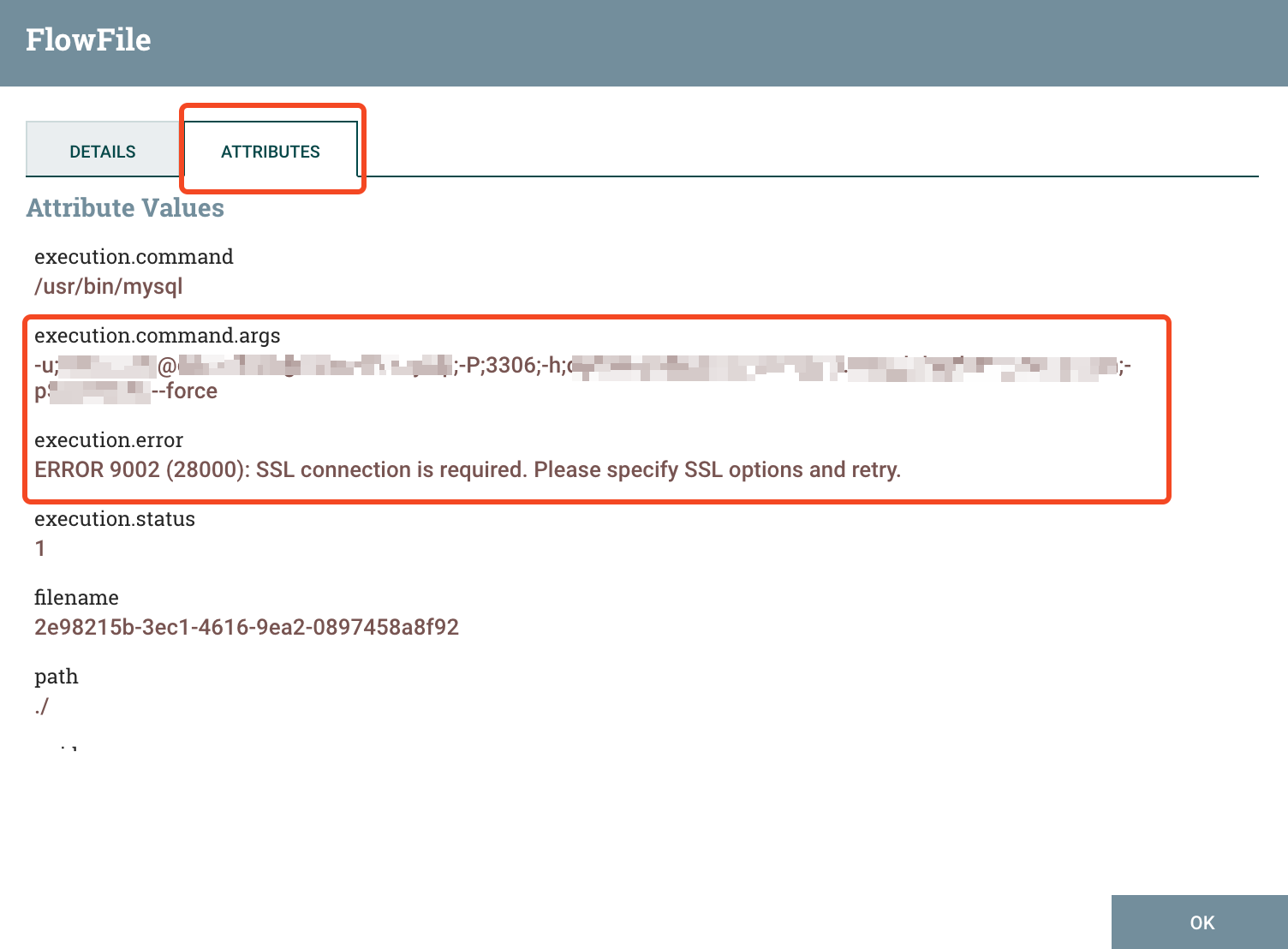

or inspect the command that was executed to see if there is a helpful error message (in our case there is one):

A faster way to figure out what went wrong, though, is to hover over the red sign that appears, in case of an error, in the upper right-hand corner of the Processor that threw it:

Now that we have seen how we can, in principle, debug and investigate errors during the execution of our workflows, go back to the guidelines of the previous chapter and, this time, do copy the SSL certificate to the Apache NiFi container.

We are now set to start a new migration instance. You will observe that after a while the ImportMysqlDump Processor goes in execution mode, for the small sign on the right upper-hand corner that indicates the active threads currently running on this component. After a while, when the workflow will:

- not have any more active threads in any processor

- have an additional message in the outcome queue of the ImportMysqlDump Processor

- have no additional messages in the nonzero status queue of the ImportMysqlDump Processor

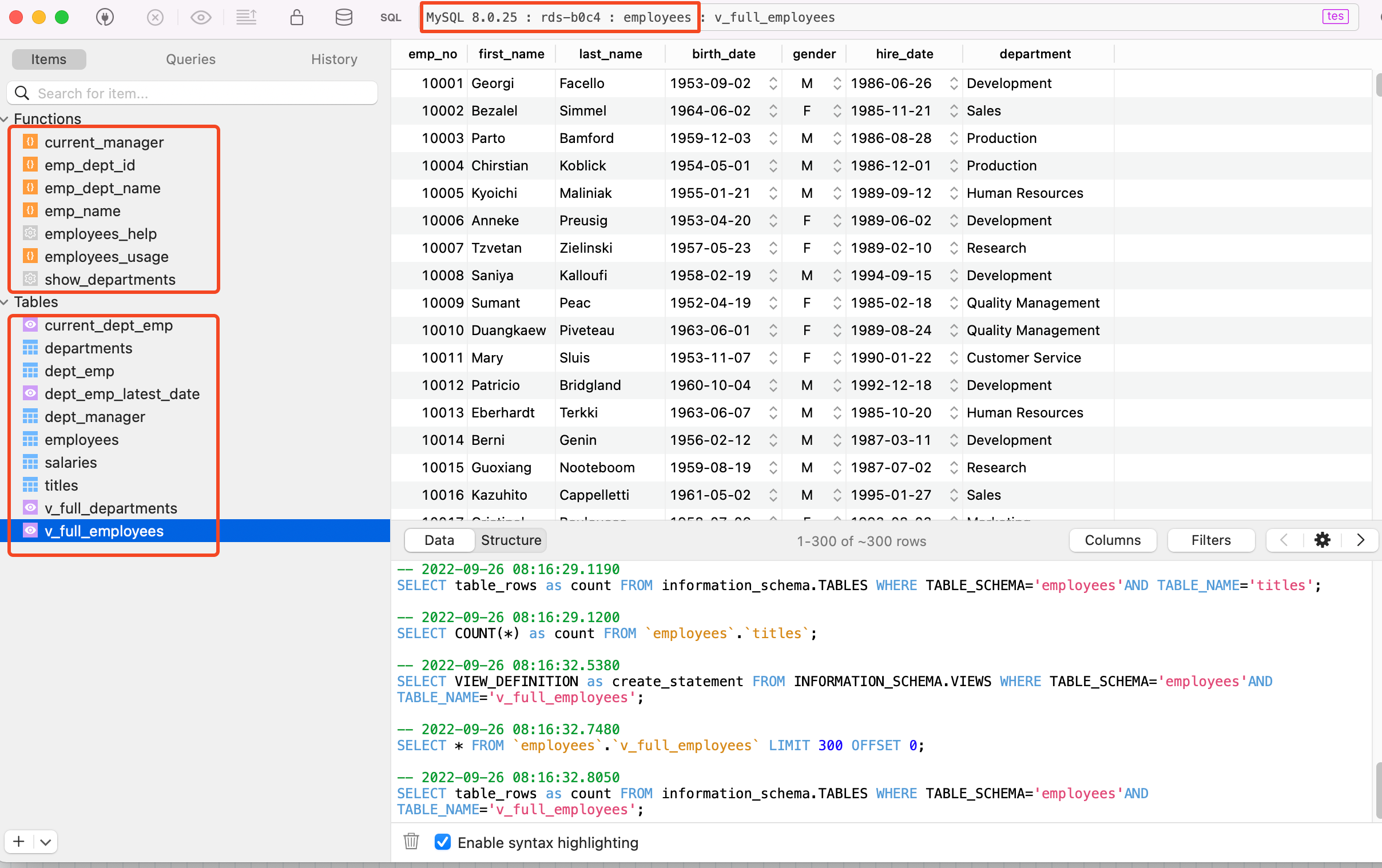

then check your database — the migration would have successfully completed: